Our laptops and phones are almost miraculously powerful. How did computers come so far in such a short period of time?

Illustration by Chehehe

Sampling Eras is a column by Rubee Dano that explores the intersection of science and history. Rubee is a history and philosophy student, with a particular interest in the history of science and academia.

The history of computers is something of an enigma. Today, our mobile phones rarely leave our hands, most laptops can run fairly intensive programs, and in scientific settings, computers can run calculations we couldn’t even begin to tackle ourselves.

One suggested explanation for the suddenness of developments in computing is the work of one of the fathers of computing, Alan Turing. University of Birmingham mathematician David Craven described Turing’s work as paving the way for his contemporaries to ask questions about computing and its limitations that could not have been asked before he laid the groundwork. According to Craven, Turing’s ideas were radical yet intuitive, and are still useful to this day. But are they why modern computing has come so far?

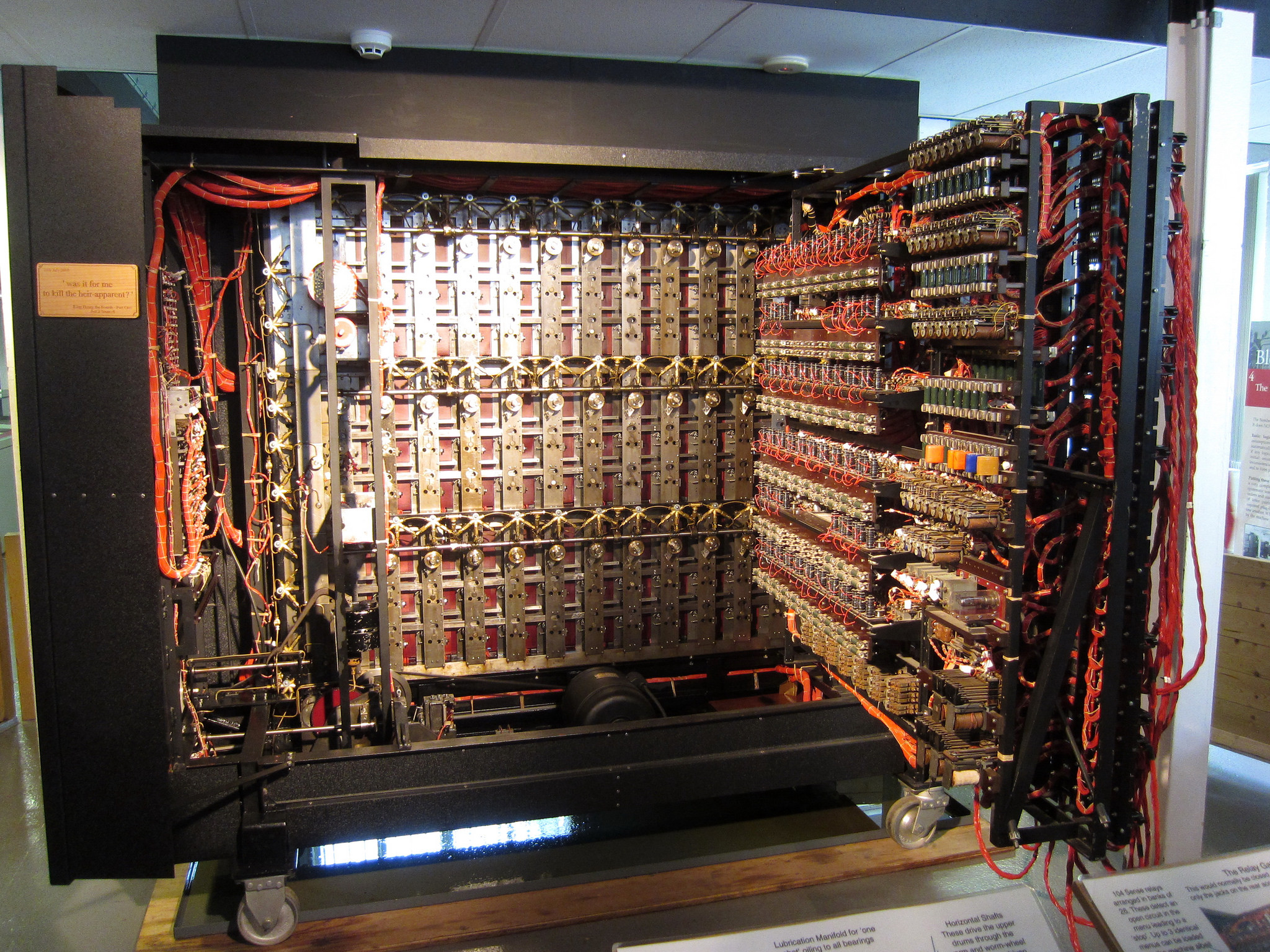

Computing hardware at Bletchley Park, where Turing worked during the Second World War, was a giant piece of technology, but very basic by today's standards. Douglas Hoyt/Flickr (CC BY-NC-ND 2.0)

Konrad Zuse’s Z1 and Alan Turing’s Turing Machine came to be seen as the foundations of modern computing. The Z1, which Zuse built in the late 1930s, was a basic programmable computer that operated on Boolean logic and used binary floating-point numbers. The Turing Machine, described by Turing in 1936, was a theoretical model of computation: an imagined machine that stores data as symbols on a tape and manipulates them one at a time according to a fixed table of rules. While both were large steps forward for computing in the 1930s, there’s no arguing that today’s computers are vastly more complex. How did we get from the Turing Machine to something that fits in your hand in only 80 years? The explanation might be Moore’s Law.
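To make the idea of data storage and symbol manipulation concrete, here is a minimal Python sketch of a Turing machine simulator. The rule table is a hypothetical example rather than one of Turing’s own machines: it adds 1 to a binary number written on the tape using nothing but reading a symbol, writing a symbol, moving the head, and changing state. The names `run_turing_machine` and `INCREMENT_RULES` are illustrative.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical rule table: (state, symbol read) -> (symbol to write, head move, next state).
# It adds 1 to a binary number, starting from the rightmost digit.
INCREMENT_RULES = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry the 1 one cell to the left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + 1 = 1, nothing left to carry
    ("carry", BLANK): ("1", 0, "halt"),  # ran past the leftmost digit: write a new leading 1
}


def run_turing_machine(tape_str, rules, start_state, max_steps=10_000):
    """Simulate a one-tape Turing machine; return the non-blank part of the final tape."""
    # The tape is unbounded in both directions; cells never written read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_str))
    head = len(tape_str) - 1  # this example starts on the rightmost symbol
    state = start_state
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    written = [i for i, symbol in tape.items() if symbol != BLANK]
    if not written:
        return ""
    return "".join(tape[i] for i in range(min(written), max(written) + 1))


print(run_turing_machine("1011", INCREMENT_RULES, "carry"))  # 11 + 1 = 12, prints 1100
```

Any computation a modern computer performs can, in principle, be expressed as a rule table like this one; what has changed since the 1930s is the speed and scale at which real hardware carries it out.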

Fifty years ago, Gordon Moore, one of the founders of tech giant Intel, observed that the number of transistors on a chip approximately doubles every two years. This observation, now considered an informal ‘law’, implies that roughly every 24 months, advances in hardware double the raw processing capacity of our computers. This is impressive: as mathematicians Jonathan Borwein and David H. Bailey have noted, if the speed of commercial airliners had followed a similar trend, planes would have been travelling close to the speed of light years ago.
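The arithmetic behind that comparison is simple exponential growth, sketched below in Python. The function names are illustrative, and the 900 km/h starting speed for an airliner is an assumed round figure, not one taken from Borwein and Bailey.

```python
import math


def moore_growth_factor(years, doubling_period_years=2.0):
    """How many times larger a quantity gets if it doubles every doubling_period_years."""
    return 2 ** (years / doubling_period_years)


def years_to_grow(start, target, doubling_period_years=2.0):
    """Years needed for `start` to reach `target` under steady doubling."""
    return doubling_period_years * math.log2(target / start)


# Fifty years of doubling every two years is 25 doublings.
print(f"{moore_growth_factor(50):,.0f}")  # 33,554,432

# Hypothetical airliner cruising at an assumed 900 km/h, with its speed doubling every two years.
SPEED_OF_LIGHT_KMH = 299_792.458 * 3600  # about 1.08 billion km/h
print(round(years_to_grow(900, SPEED_OF_LIGHT_KMH), 1))  # about 40 years
```

Twenty-five doublings multiply the starting figure more than 33 million times, which is why the hypothetical plane would overtake light in roughly four decades.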

But how is hardware directly related to the processing power of computers? Alex Keech, a developer at a Melbourne-based tech start-up, said “the main advance in computer hardware for a long time has been increasing the number of transistors.” Transistors are what allow computers to perform functions: complicated commands are broken down into simple binary operations, which are carried out by logic gates built from transistors. “Essentially,” Keech explained, “the number of transistors represents the number of active modes a computer can have at one point, and so, ignoring diminishing returns, doubling the number of transistors doubles the number of actions a computer can perform at any given time.”
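As an illustration of how a command such as “add two numbers” breaks down into binary operations, here is a minimal Python sketch of a ripple-carry adder built from logic gates. The gate functions are hypothetical stand-ins for transistor circuits; real processors implement them in silicon and typically use faster adder designs than this simple one.

```python
# Each gate below stands in for a small circuit of transistors in real hardware.
def and_gate(a, b):
    return a & b


def or_gate(a, b):
    return a | b


def xor_gate(a, b):
    return a ^ b


def full_adder(a, b, carry_in):
    """Add three single bits; return (sum bit, carry-out bit)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def ripple_carry_add(x, y, width=8):
    """Add two unsigned integers one bit at a time, like a fixed-width hardware adder."""
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result  # a carry out of the top bit is dropped: this is (x + y) mod 2**width


print(ripple_carry_add(5, 3))  # 8
```

Every bit position needs its own full adder, so wider and more numerous operations need more transistors, which is the link Keech draws between transistor count and capability.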

“On a hardware level, broadly speaking, not much else has changed,” said Keech. New software has let us make better use of the resources we have, but the hardware itself keeps doubling in capability, and that is why it has advanced so fast.

Andrea Morello describes Moore’s Law in relation to the structure of transistors, and how transistors must shrink to accommodate the increase in their number every 18–24 months predicted by Moore’s Law.

What mostly changes with each new generation of computer is the size of its transistors. For example, AMD, a leading computer hardware company, built its mid-2013 “Jaguar” CPU with 28nm transistors, but its latest release, expected in 2017, will have 14nm transistors. In recent times, it is this shrinking of transistors that has allowed Moore’s Law to continue, because more transistors can fit into the same area of a chip. “Making chips larger, while possible, is generally avoided [due] to costs of development and manufacturing, as well as technical limitations in getting transistors spaced further apart to talk to one another quickly,” said Keech. “We put more stuff in there by making it smaller and smaller.”
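Why shrinking works so well comes down to geometry: area scales with the square of length. The Python sketch below assumes idealised scaling, where every dimension of a transistor shrinks by the same ratio; real node names such as “14nm” are labels for manufacturing generations and no longer match any single physical dimension, so treat this as illustrative only. The function names and the 20-to-10 example are hypothetical.

```python
import math


def ideal_density_gain(old_feature_size, new_feature_size):
    """Transistors per unit area gained if every dimension shrinks by the same ratio."""
    # Area per transistor scales with the square of its linear size.
    return (old_feature_size / new_feature_size) ** 2


def shrink_needed_for(density_gain):
    """Linear scale factor (new size / old size) that yields the given density gain."""
    return 1 / math.sqrt(density_gain)


print(ideal_density_gain(20, 10))        # 4.0: halving the size quadruples density
print(round(shrink_needed_for(2.0), 3))  # 0.707: a ~30% shrink is enough to double density
```

Under these assumptions, a shrink to about 70% of the previous size is all that one doubling requires.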

However, it appears Moore’s Law is finally beginning to slow down. “Even doubling [the] number of transistors doesn’t result in doubling of speed anymore because there’s other stuff going on,” said Keech. In recent years, each doubling has taken longer than the predicted two years. “Indeed,” Keech said, “there is increasing evidence that Moore’s Law will not be able to sustain itself in the future. At some point, you can’t reduce the size of the transistor any further and still have it do what it is required to do.” Keech added: “Traditionally, decreasing transistor size has resulted in increased power efficiency and clock speed, though this trend seems to be coming to an end also.”

Quantum computing represents the first fundamental change in computer hardware since the time of Zuse and Turing. In a binary computer, each bit has only two possible states: 0 or 1, on or off. A quantum bit, or qubit, can instead exist in a combination of 0 and 1, and a group of n qubits is described by 2 to the power of n values at once. This vastly increases the number of states a quantum computer can work with, which is what gives it its potential power on certain problems.

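Quantum computing exploits superposition, meaning the equivalent of a transistor can be both on and off at the same time, much like Schrödinger’s famed cat. It also exploits quantum entanglement, which links qubits so that their measured values are correlated with one another.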
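Superposition and entanglement can be illustrated with a tiny state-vector simulation in plain Python. This is a classical sketch, not how quantum hardware works: it simply tracks the 2 to the power of n amplitudes that describe n qubits, which is also why simulating qubits on an ordinary computer gets exponentially more expensive. The function names are hypothetical; the Hadamard and CNOT gates are standard textbook operations.

```python
import math

H = 1 / math.sqrt(2)


def apply_hadamard(state, qubit):
    """Put one qubit into an equal superposition (the Hadamard gate)."""
    new_state = [0j] * len(state)
    mask = 1 << qubit
    for index, amplitude in enumerate(state):
        zero, one = index & ~mask, index | mask
        if index & mask:  # qubit is 1: |1> -> (|0> - |1>) / sqrt(2)
            new_state[zero] += H * amplitude
            new_state[one] -= H * amplitude
        else:             # qubit is 0: |0> -> (|0> + |1>) / sqrt(2)
            new_state[zero] += H * amplitude
            new_state[one] += H * amplitude
    return new_state


def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1 (used to create entanglement)."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        dest = index ^ (1 << target) if (index >> control) & 1 else index
        new_state[dest] += amplitude
    return new_state


def probabilities(state):
    """Chance of measuring each basis state: the squared size of its amplitude."""
    return [abs(a) ** 2 for a in state]


# Three qubits in superposition: 2**3 = 8 amplitudes, each outcome equally likely.
state = [0j] * 8
state[0] = 1
for q in range(3):
    state = apply_hadamard(state, q)
print([round(p, 3) for p in probabilities(state)])  # eight values of 0.125

# Two entangled qubits (a Bell state): only 00 and 11 can ever be measured.
bell = apply_cnot(apply_hadamard([1, 0j, 0j, 0j], 0), control=0, target=1)
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]
```

In the entangled case, measuring either qubit immediately tells you the value of the other, even though each on its own is equally likely to be 0 or 1.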

Current computer processors fall into two main categories: graphics processing units (GPUs), which use thousands of small cores, and central processing units (CPUs), which typically use eight or fewer larger cores. The advantage of CPUs is that they complete individual tasks very quickly and are more agile at switching between tasks; a GPU, however, will tackle large volumes of repetitive work faster than a CPU will. “If you give it a thousand small tasks, a CPU will be better, based on how they process tasks,” Keech said. “[Graphics cards], while mainly used to run games, are also good at heavy number crunching, and lend themselves well to complex organic chemistry calculations or physics in a research setting. CPUs are utilised in computers such as regular laptops, phones, and tablets, because users want fast response.” Quantum computing is a likely next step in fields that currently rely on GPUs.

Few computer scientists today are concerned with the hardware side of computing; instead, computing degrees tend to focus on software. Quantum computing, for example, is a field that has been largely developed by physicists. The current challenge for quantum computing is building the hardware, although the Centre for Quantum Computation and Communication Technology is making strides, potentially using either photons or the electrons of phosphorus atoms within a silicon chip to make the technology possible. University of New South Wales quantum physicist Andrea Morello has cited silicon chips as potentially paving the way for quantum computing to flourish, due to their ability to store quantum information.

Morello described quantum computing in another article as having the potential to surpass CPUs and GPUs at tasks such as cracking encryption and searching unsorted databases. So far, however, the most complex calculation completed using a quantum computer has been ‘3x5 = 15’. This is not an indication of how far quantum computers lag behind conventional ones; it simply proves we can do these kinds of calculations with them. None of this necessarily means that quantum computers will replace current hardware; it’s more likely they will work alongside regular computers. Their advantage lies in computations that their unique capabilities make easy to solve. Because these aren’t the only problems we need to solve, quantum computers won’t replace what we know as computers today, even if Moore’s Law does cease to move forward.
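The ‘3x5 = 15’ result refers to factoring: a well-known 2001 demonstration used Shor’s algorithm on a small quantum computer to find that 15 = 3 × 5. Factoring matters for encryption because widely used schemes such as RSA rely on it being hard. The Python sketch below shows the classical outline of Shor’s algorithm, with one big assumption: the period-finding step, which is the part a quantum computer is meant to speed up, is done here by brute force. The function names are hypothetical.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    In Shor's algorithm this is the step a quantum computer speeds up; done
    classically, as here, it becomes hopeless for the large numbers used in encryption.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_factor(n, a):
    """Try to split n into two factors using guess `a`; return None if `a` is unlucky."""
    common = gcd(a, n)
    if common != 1:
        return common, n // common  # the guess already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: try a different a
    half_power = pow(a, r // 2, n)
    if half_power == n - 1:
        return None  # trivial square root: try a different a
    return gcd(half_power - 1, n), gcd(half_power + 1, n)


print(shor_factor(15, 7))  # period of 7 mod 15 is 4, giving (3, 5)
```

The classical steps are cheap; only the period-finding step grows unmanageably for large numbers, which is exactly the kind of problem where quantum hardware is expected to have an edge.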

Quantum computers represent a change in the basic building block of computer hardware, from transistors storing bits to qubits. In classical computers, however, the number of transistors is largely why computing has come as far as it has so quickly. Other hardware elements like RAM and disk storage allow us to store more information and make use of the operations transistors perform, but most of the legwork is done by the processor, hence the importance of transistors. Because of Moore’s Law and the approximate doubling of the number of transistors per chip over most of the last 50 years, it’s fairly easy to see how we went from the Turing Machine to today.

Edited by Jack Scanlan